Representative image

| Photo Credit: The Hindu

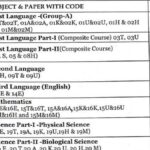

The Supreme Court on Monday (November 10, 2025) agreed to list, after a fortnight, a petition highlighting that the indiscriminate use of Generative Artificial Intelligence (GenAI) in judicial work can lead to "hallucinations", producing fictitious judgments and research material and, worse still, perpetuating bias.

Chief Justice of India B.R. Gavai, before whose Bench the case came up, remarked that the judges were aware of, and vocal about, the incursions AI has made into judicial functioning.

Kartikeya Rawal, an advocate represented by counsel Abhinav Shrivastava, urged the top court to formulate a strict policy or, at the least, frame guidelines for the regulated, transparent, secure and uniform use of GenAI in courts, tribunals and other quasi-judicial bodies until a law was put in place.

Raises concerns

The petition warned that the opaque use of AI and Machine Learning technologies in the judicial system and in governance would raise constitutional and human rights concerns. It said the judiciary must use only data that is free from bias, and that the ownership of that data must be transparent enough to ensure that stakeholders can be held liable.

“The skill of GenAI to leverage advanced neural networks and unsupervised learning to generate new data, uncover hidden patterns, and automate complex processes can lead to ‘hallucinations’, resulting in fake case laws, AI bias, and lengthy observations… This process of hallucinations would mean that GenAI would not be based on precedents but on a law that might not even exist,” the petition submitted.

GenAI was capable of producing original content based on prompts or queries. It could create realistic images, generate content such as graphics and text, answer questions, explain complex concepts, and convert natural language into code, Mr. Rawal said.

Further, he submitted that GenAI algorithms could also “replicate, perpetuate, aggravate” pre-existing biases, discrimination, and stereotypical practices, raising profound ethical and legal challenges.

Fluctuations of AI

The petition pointed out that judicial reasoning and decisions cannot be left to the fluctuations of AI, since the public has a right to know the reasoning behind judgments. Judicial reasoning and its sources must be transparent, not arbitrary. Mr. Rawal submitted that AI may assist in administrative efficiency, but it cannot replace the "prudence, moral judgment, and human discretion essential to judicial decision-making".

“For the safe and constitutionally compliant deployment of GenAI in the justice system, it is essential that judicial operators maintain a ‘human in the loop’ principle, ensuring that adequately trained professionals exercise supervisory control and validate GenAI-generated outputs,” the petition contended.

It flagged that one of the biggest dangers of integrating AI into judicial work was data opacity, largely owing to the "black box" algorithms employed in GenAI.

“The term ‘black box’ is used to denote a technological system that is inherently opaque, whose inner workings or underlying logic are not properly comprehended, or whose outputs and effects cannot be explained. This can make it extremely difficult to detect flawed outputs, particularly in GenAI systems that discover patterns in the underlying data in an unsupervised manner. The opacity of such algorithms means that even their creators may not fully understand the internal logic, creating the risk of arbitrariness and discrimination,” the petition noted.

Published – November 10, 2025 08:40 pm IST