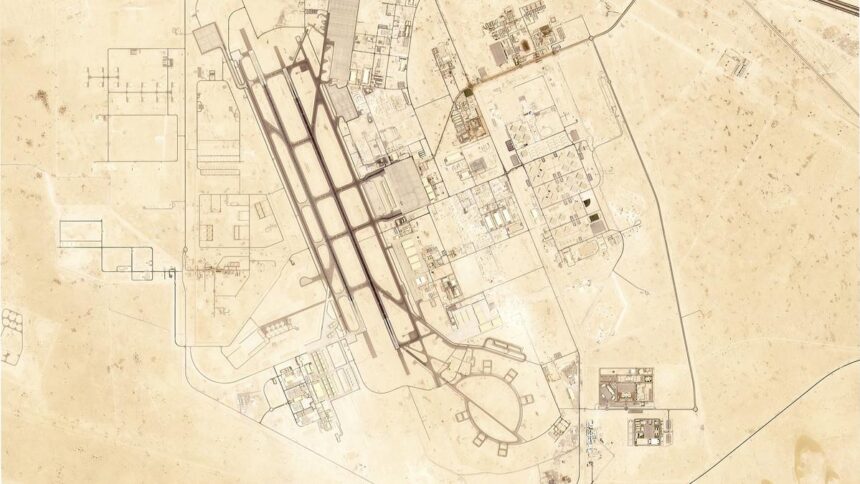

People leave the Supreme Court of Victoria in Melbourne on August 15, 2025.

Photo Credit: AP

A senior lawyer in Australia has apologised to a judge for filing submissions in a murder case that included fake quotes and non-existent case judgments generated by artificial intelligence.

The blunder in the Supreme Court of Victoria state is another in a litany of mishaps AI has caused in justice systems around the world.

Defence lawyer Rishi Nathwani, who holds the prestigious legal title of King’s Counsel, took “full responsibility” for filing incorrect information in submissions in the case of a teenager charged with murder, according to court documents seen by The Associated Press on Friday (August 15, 2025).

“We are deeply sorry and embarrassed for what occurred,” Mr. Nathwani told Justice James Elliott on Wednesday (August 13, 2025), on behalf of the defence team.

The AI-generated errors caused a 24-hour delay in resolving a case that Justice Elliott had hoped to conclude on Wednesday (August 13, 2025). Justice Elliott ruled on Thursday (August 14, 2025) that Mr. Nathwani’s client, who cannot be identified because he is a minor, was not guilty of murder because of mental impairment.

“At the risk of understatement, the manner in which these events have unfolded is unsatisfactory,” Justice Elliott told lawyers on Thursday (August 14, 2025).

“The ability of the court to rely upon the accuracy of submissions made by counsel is fundamental to the due administration of justice,” Justice Elliott added.

The fake submissions included fabricated quotes from a speech to the state legislature and non-existent case citations purportedly from the Supreme Court.

The errors were discovered by Justice Elliott’s associates, who couldn’t find the cases and requested that defence lawyers provide copies.

The lawyers admitted the citations “do not exist” and that the submission contained “fictitious quotes,” court documents say.

The lawyers explained they checked that the initial citations were accurate and wrongly assumed the others would also be correct.

The submissions were also sent to prosecutor Daniel Porceddu, who didn’t check their accuracy. The judge noted that the Supreme Court had released guidelines last year on how lawyers should use AI.

“It is not acceptable for artificial intelligence to be used unless the product of that use is independently and thoroughly verified,” Justice Elliott said.

The court documents do not identify the generative artificial intelligence system used by the lawyers.

In a comparable case in the United States in 2023, a federal judge imposed $5,000 fines on two lawyers and a law firm after ChatGPT was blamed for their submission of fictitious legal research in an aviation injury claim.

Judge P. Kevin Castel said they acted in bad faith. But he credited their apologies and the remedial steps they had taken in explaining why harsher sanctions were not necessary to ensure that neither they nor others would again let artificial intelligence tools prompt them to produce fake legal history in their arguments.

Later that year, more fictitious court rulings invented by AI were cited in legal papers filed by lawyers for Michael Cohen, a former personal lawyer for U.S. President Donald Trump.

Mr. Cohen took the blame, saying he didn’t realise that the Google tool he was using for legal research was also capable of so-called AI hallucinations.

Published – August 15, 2025 04:48 pm IST